Service Discovery is one of the key concepts for building a Service Oriented distributed system. Simply put, when service A needs to call service B, it first needs to find a running instance of B. Static configurations become inappropriate in an elastic, dynamic system, where service instances are provisioned and de-provisioned frequently (planned or unplanned) or where network failures are frequent (as in the cloud). Finding an instance of B is no longer a trivial task.

Discovery implies a mechanism where:

- Services have no prior knowledge about the physical location of other Service Instances.
- Services advertise their existence and disappearance.
- Services are able to find instances of another Service based on advertised metadata.
- Instance failures are detected, and failed instances are removed from the discovery results.
- Service Discovery is not a single point of failure by itself.

Eureka Overview

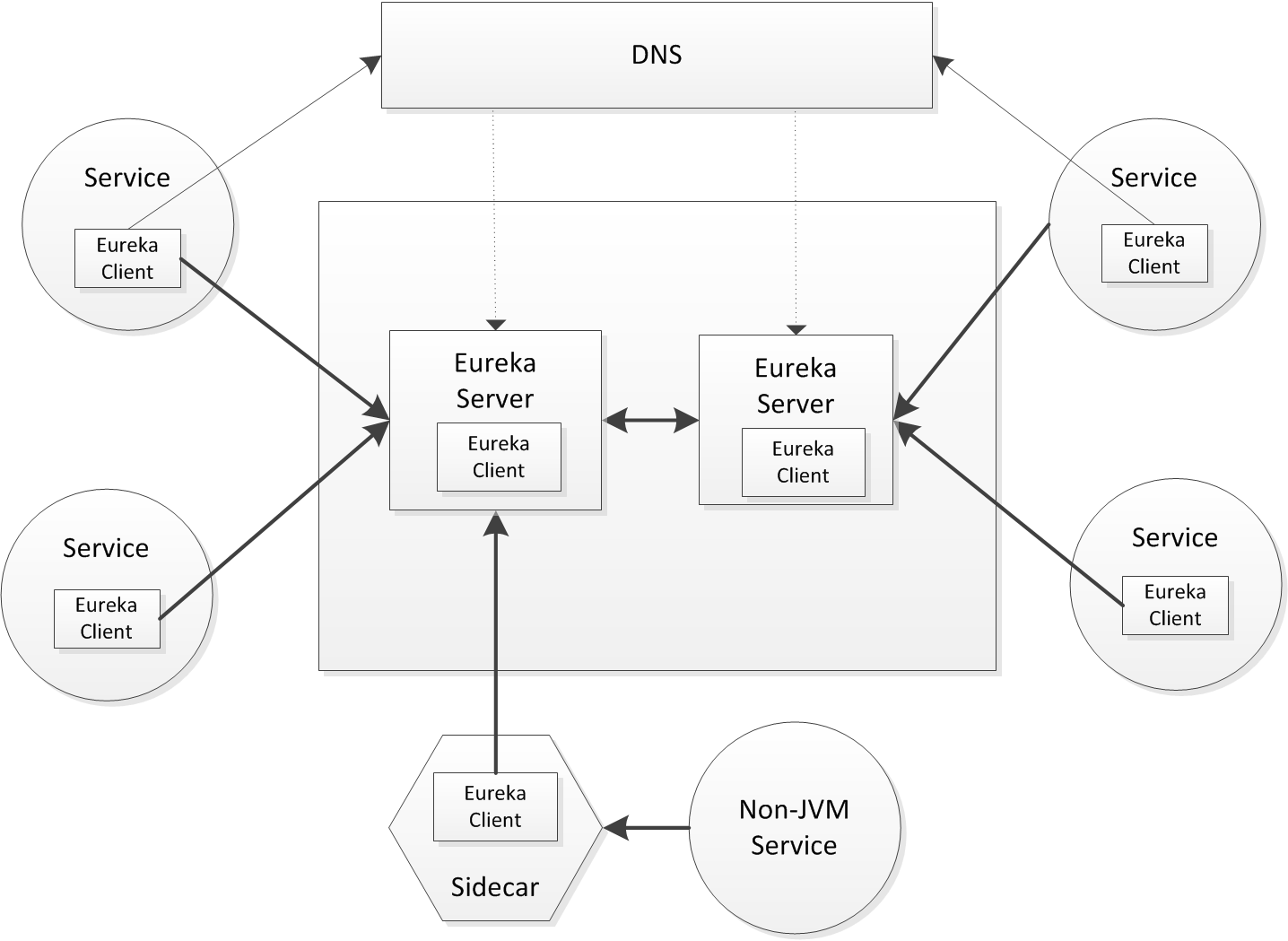

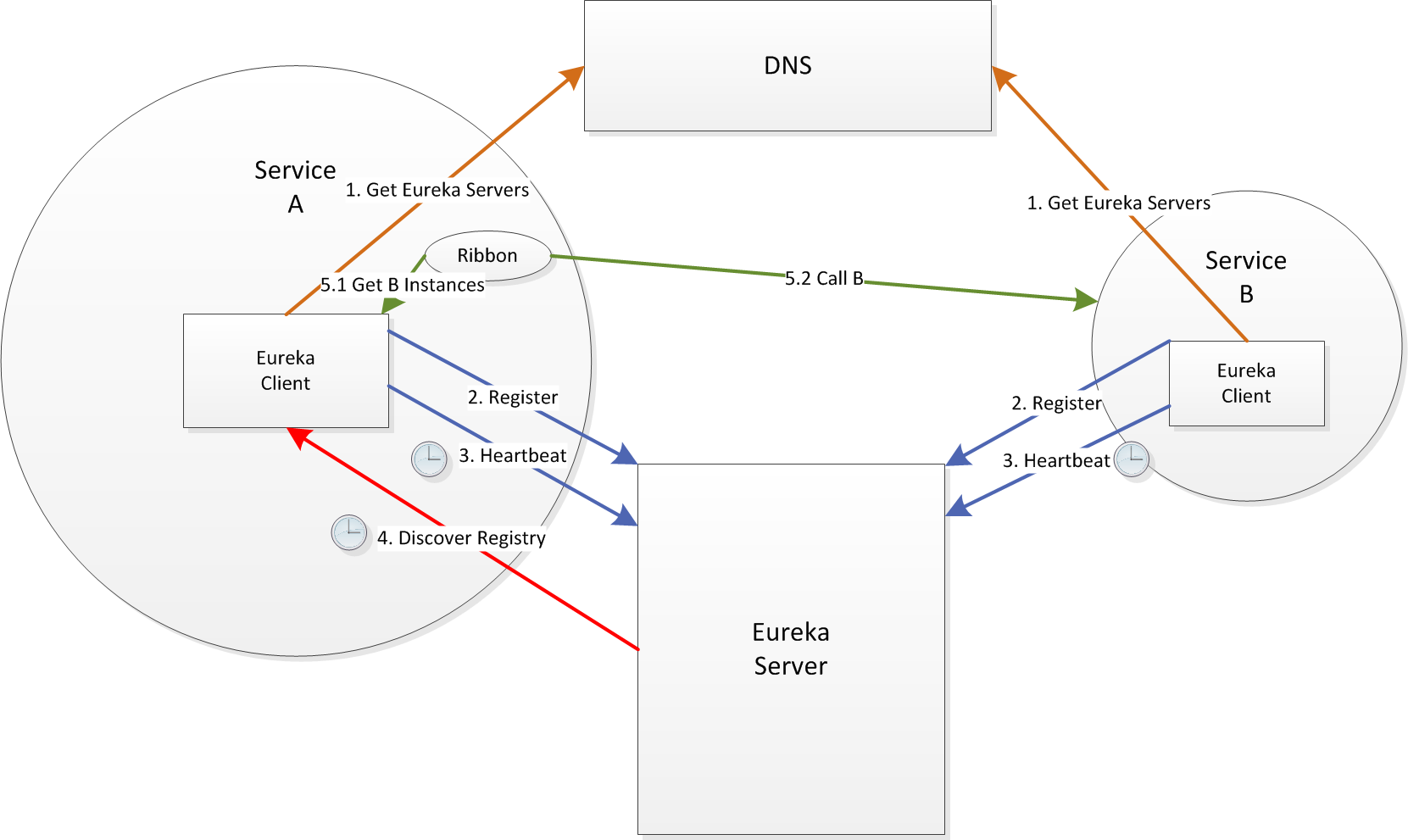

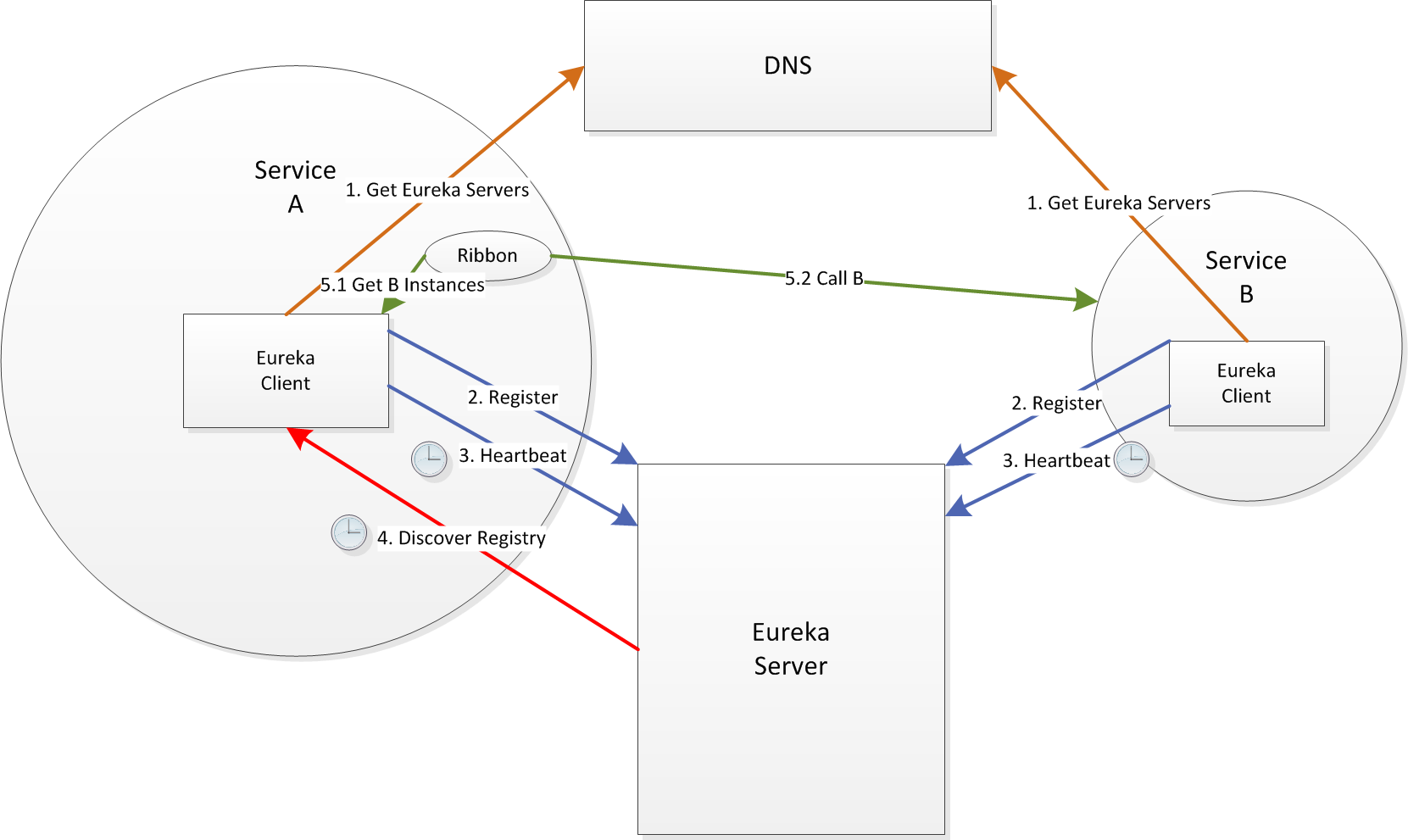

The Netflix Eureka architecture consists of two components: the Server and the Client.

The Server is a standalone application and is responsible for:

- managing a registry of Service Instances,
- providing means to register, de-register and query Instances with the registry,
- propagating the registry to other Eureka Instances (Servers or Clients).

The Client is part of the Service Instance ecosystem and has responsibilities like:

- registering and unregistering a Service Instance with the Eureka Server,
- keeping the connection with the Eureka Server alive,
- retrieving and caching discovery information from the Eureka Server.

Discovery units

Eureka has the notion of Applications (Services) and Instances of these Applications.

The query unit is the application/service identifier and the results are instances of that application that are present in the discovery registry.
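To make the query unit concrete, here is a minimal in-memory model of a registry keyed by application name. It is a sketch, not Eureka's implementation: `InMemoryRegistry` and `InstanceRef` are hypothetical names. Application names are compared case-insensitively, in line with the upper-case `BESTPRICES` name Eureka reports for the `bestprices` service.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical value type: one registered Service Instance.
record InstanceRef(String appName, String instanceId, String host, int port) {}

// Hypothetical registry: application name -> (instance id -> instance).
final class InMemoryRegistry {
    private final Map<String, Map<String, InstanceRef>> apps = new ConcurrentHashMap<>();

    // Normalize names so "bestprices" and "BESTPRICES" are the same application.
    private static String key(String appName) {
        return appName.toUpperCase(Locale.ROOT);
    }

    void register(InstanceRef instance) {
        apps.computeIfAbsent(key(instance.appName()), k -> new ConcurrentHashMap<>())
            .put(instance.instanceId(), instance);
    }

    void deregister(String appName, String instanceId) {
        Map<String, InstanceRef> instances = apps.get(key(appName));
        if (instances != null) {
            instances.remove(instanceId);
        }
    }

    // The query unit is the application name; the result is its registered instances.
    List<InstanceRef> getInstances(String appName) {
        Map<String, InstanceRef> instances = apps.get(key(appName));
        return instances == null ? List.of() : new ArrayList<>(instances.values());
    }
}
```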

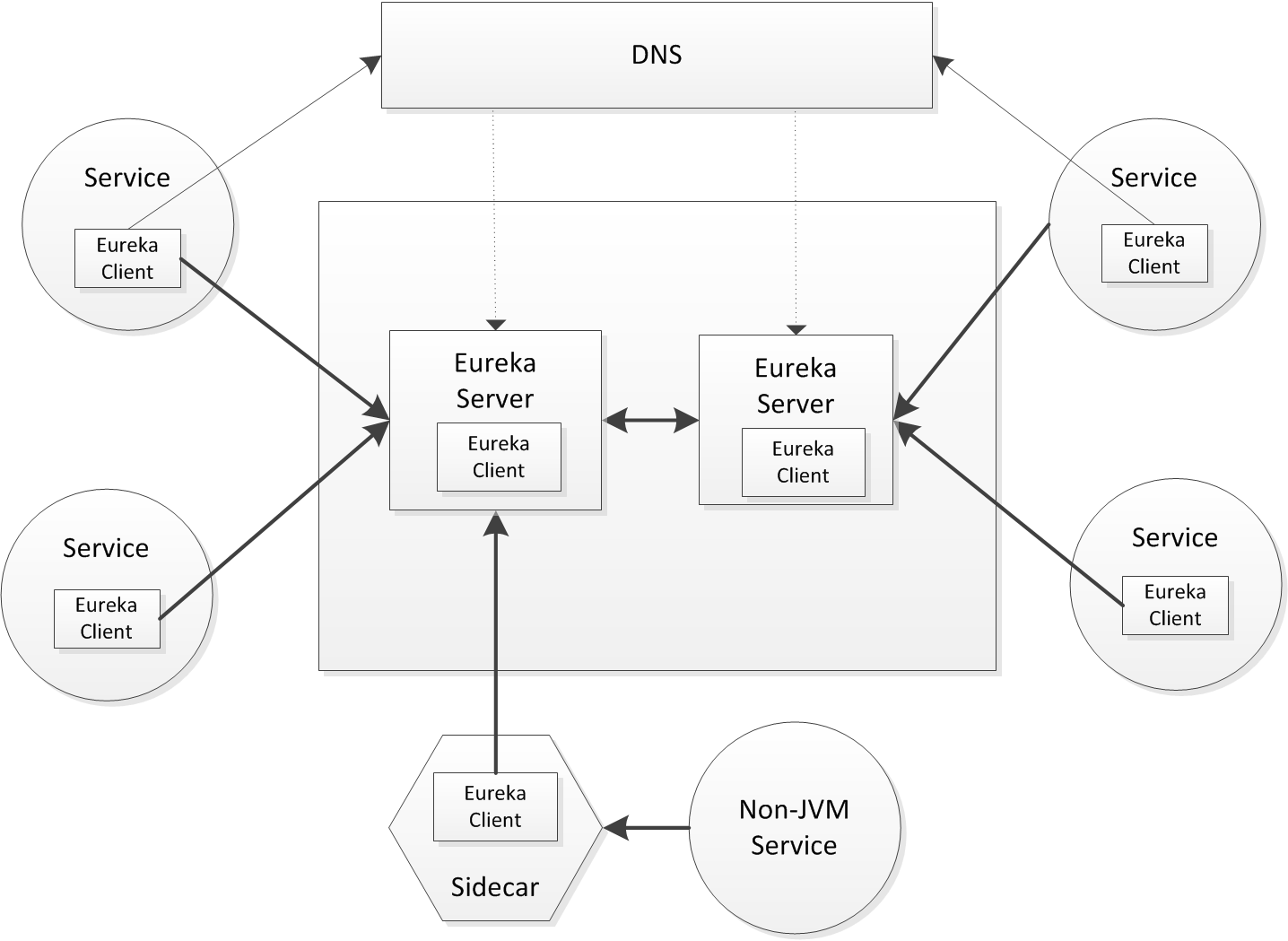

High Availability

Netflix Eureka is built for High Availability. In CAP Theorem terms, it favours Availability over Consistency.

The focus is on ensuring Services can find each other in unplanned scenarios like network partitions or Server crashes.

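High Availability is achieved at two levels:

- Server cluster: Eureka Servers are deployed as a cluster of replica peers, so losing one Server does not make discovery unavailable.
- Client side caching: Clients retrieve and cache the registry information from the Eureka Server. In case all Servers crash, the Client still holds the last healthy snapshot of the registry.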

Terminology

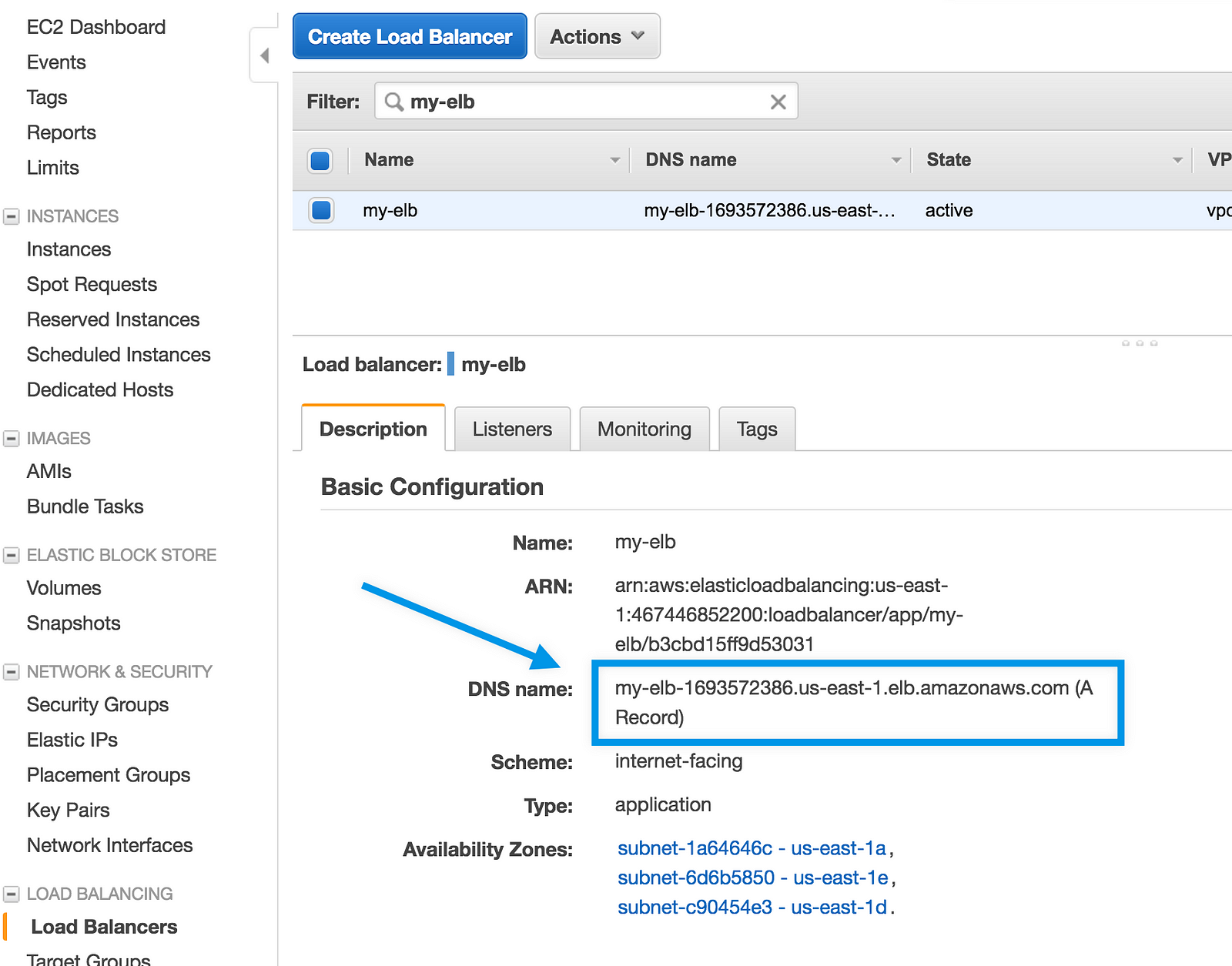

Eureka was built to work with Amazon Web Services (AWS); therefore, its terminology refers to AWS-specific concepts such as regions and zones. The examples in this article use the default region, us-east-1, and the default zone, defaultZone.

Eureka Server

The Server is the actual Discovery Service in your typical SOA system. Start by cloning the Spring Cloud Eureka Sample repository from GitHub.

Standalone setup

It is easy to start a Eureka Server with Spring Cloud.

Any Spring Boot application becomes a Eureka Server when annotated with `@EnableEurekaServer`. Use the setup below on a local development machine.

Sample Eureka Server application:

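```java
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.cloud.client.discovery.EnableDiscoveryClient;
import org.springframework.cloud.netflix.eureka.server.EnableEurekaServer;

@SpringBootApplication
@EnableEurekaServer      // turns this Spring Boot application into a Eureka Server
@EnableDiscoveryClient
public class EurekaApplication {

    public static void main(String[] args) {
        SpringApplication.run(EurekaApplication.class, args);
    }
}
```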

Configuration yml:

```yaml
server:
  port: 8761
security:
  user:
    password: ${eureka.password}
eureka:
  password: ${SECURITY_USER_PASSWORD:password}
  server:
    waitTimeInMsWhenSyncEmpty: 0
    enableSelfPreservation: false
  client:
    preferSameZoneEureka: false
---
spring:
  profiles: devlocal
eureka:
  instance:
    hostname: localhost
  client:
    registerWithEureka: false
    fetchRegistry: false
    serviceUrl:
      defaultZone: http://user:${eureka.password:${SECURITY_USER_PASSWORD:password}}@localhost:8761/eureka/
```

Start the application using the following Spring Boot Maven target:

```shell
mvn spring-boot:run -Drun.jvmArguments="-Dspring.profiles.active=devlocal"
```

The settings registerWithEureka and fetchRegistry are set to false, so this Server neither registers itself with, nor fetches the registry from, other Servers: it is not part of a cluster.

Cluster setup

To avoid a single point of failure, Eureka Servers are deployed as a cluster of replica peers. They replicate their registries to each other, so that the peers converge on the same view of the system. Clients have no affinity to a particular Server and can transparently connect to another one in case of failure.

Eureka Servers need to be pointed to other Server instances.

There are different ways to do this, described below.

DNS

Netflix uses DNS configuration to manage the Eureka Server list dynamically, without affecting the applications that use Eureka. This is the recommended production setup. Consider an example with two Eureka Servers in a cluster, dsc01 and dsc02.

You can use BIND or another DNS server.

DNS configuration:

```
$TTL    604800
@       IN      SOA     ns.eureka.local. hostmaster.eureka.local. (
                        1024        ; Serial
                        604800      ; Refresh
                        86400       ; Retry
                        2419200     ; Expire
                        604800 )    ; Negative Cache TTL
;
@                  IN  NS   ns.eureka.local.
ns                 IN  A    10.111.42.10
txt.us-east-1      IN  TXT  "defaultZone.eureka.local"
txt.defaultZone    IN  TXT  "dsc01" "dsc02"
;
```

Application configuration:

```yaml
eureka:
  client:
    registerWithEureka: true
    fetchRegistry: true
    useDnsForFetchingServiceUrls: true
    eurekaServerDNSName: eureka.local
    eurekaServerPort: 8761
    eurekaServerURLContext: eureka
```
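The DNS records and the settings eurekaServerDNSName, eurekaServerPort and eurekaServerURLContext work together in two lookup steps: the TXT record for the region (`txt.us-east-1.eureka.local`) lists zone names, and the TXT record of each zone lists Server hostnames, from which service URLs are built. The sketch below approximates that resolution; `DnsServerUrlResolver` is hypothetical, and the `txtLookup` function stands in for a real DNS query (for instance through the JDK's JNDI DNS provider). It is not Eureka's own code.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

// Hypothetical approximation of DNS-based discovery of Eureka Server URLs.
final class DnsServerUrlResolver {
    private final Function<String, List<String>> txtLookup; // TXT record name -> values
    private final int port;          // eurekaServerPort
    private final String context;    // eurekaServerURLContext

    DnsServerUrlResolver(Function<String, List<String>> txtLookup, int port, String context) {
        this.txtLookup = txtLookup;
        this.port = port;
        this.context = context;
    }

    List<String> resolve(String region, String domain) {
        List<String> urls = new ArrayList<>();
        // Step 1: the region record lists zone names, e.g. "defaultZone.eureka.local".
        for (String zoneName : txtLookup.apply("txt." + region + "." + domain)) {
            // Step 2: each zone record lists Server hostnames, e.g. "dsc01" "dsc02".
            for (String host : txtLookup.apply("txt." + zoneName)) {
                urls.add("http://" + host + ":" + port + "/" + context + "/");
            }
        }
        return urls;
    }
}
```

With the zone file above, port 8761 and context eureka, this yields http://dsc01:8761/eureka/ and http://dsc02:8761/eureka/. Changing the TXT records changes the list without touching application configuration, which is the point of the DNS approach.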

Static servers list

Configuration for the above cluster:

```yaml
eureka:
  client:
    registerWithEureka: true
    fetchRegistry: true
    serviceUrl:
      defaultZone: http://dsc01:8761/eureka/,http://dsc02:8762/eureka/
```

This is handy when running without DNS, but any change to the list requires a restart.
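The comma-separated defaultZone value is simply an ordered list of Server URLs. A minimal sketch of how a client can use it without Server affinity is to try the URLs in order and move to the next one when a call fails. `StaticServerList` and `callFirstAvailable` are hypothetical; Eureka's own client has more elaborate retry handling that is not modelled here.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Function;

// Hypothetical failover helper over a static list of Eureka Server URLs.
final class StaticServerList {
    private final List<String> urls;

    StaticServerList(String defaultZone) {
        // "http://dsc01:8761/eureka/,http://dsc02:8762/eureka/" -> two URLs
        this.urls = Arrays.stream(defaultZone.split(","))
                .map(String::trim)
                .filter(s -> !s.isEmpty())
                .toList();
    }

    List<String> urls() {
        return urls;
    }

    // Tries each Server in order and returns the first successful result.
    <T> T callFirstAvailable(Function<String, T> call) {
        List<RuntimeException> failures = new ArrayList<>();
        for (String url : urls) {
            try {
                return call.apply(url);
            } catch (RuntimeException e) {
                failures.add(e); // this Server failed: try the next one
            }
        }
        IllegalStateException all = new IllegalStateException("All Eureka Servers failed");
        failures.forEach(all::addSuppressed);
        throw all;
    }
}
```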

Multiple Servers on a single machine

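Care is required when starting multiple Servers on the same machine. Each Server needs a different hostname; different ports alone do not suffice, because a Server ignores peer URLs whose hostname matches its own and would therefore not replicate with its neighbours. These hostnames must resolve to localhost. One way to do this is to map them to 127.0.0.1 in the hosts file (on Windows, C:\Windows\System32\drivers\etc\hosts).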

```yaml
eureka:
  instance:
    hostname: server1
```
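Before starting several Servers on one machine, it can help to check that each configured hostname really resolves to a loopback address. The helper below is a hypothetical convenience, not part of Eureka or Spring Cloud; it relies only on `java.net.InetAddress`.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

// Hypothetical pre-flight check for multiple local Eureka Servers.
final class LocalHostnameCheck {
    // True only if the hostname resolves and every resolved address is a loopback address.
    static boolean resolvesToLoopback(String hostname) {
        try {
            InetAddress[] addresses = InetAddress.getAllByName(hostname);
            for (InetAddress address : addresses) {
                if (!address.isLoopbackAddress()) {
                    return false;
                }
            }
            return addresses.length > 0;
        } catch (UnknownHostException e) {
            return false; // not in the hosts file or DNS
        }
    }
}
```

For example, `LocalHostnameCheck.resolvesToLoopback("server1")` should return true once the hosts file maps server1 to 127.0.0.1; a second Server would need its own hostname (for instance a hypothetical server2) checked the same way.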

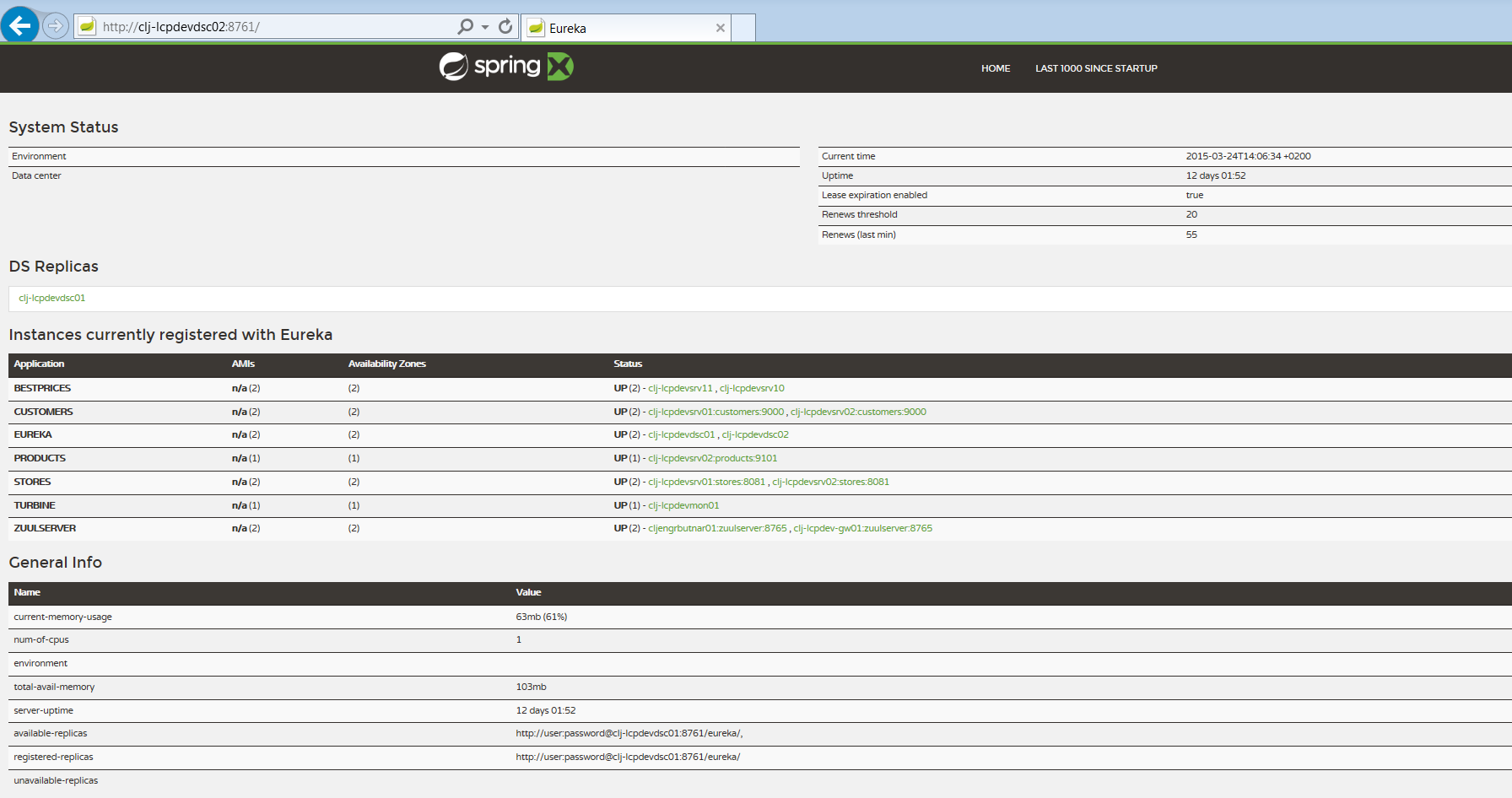

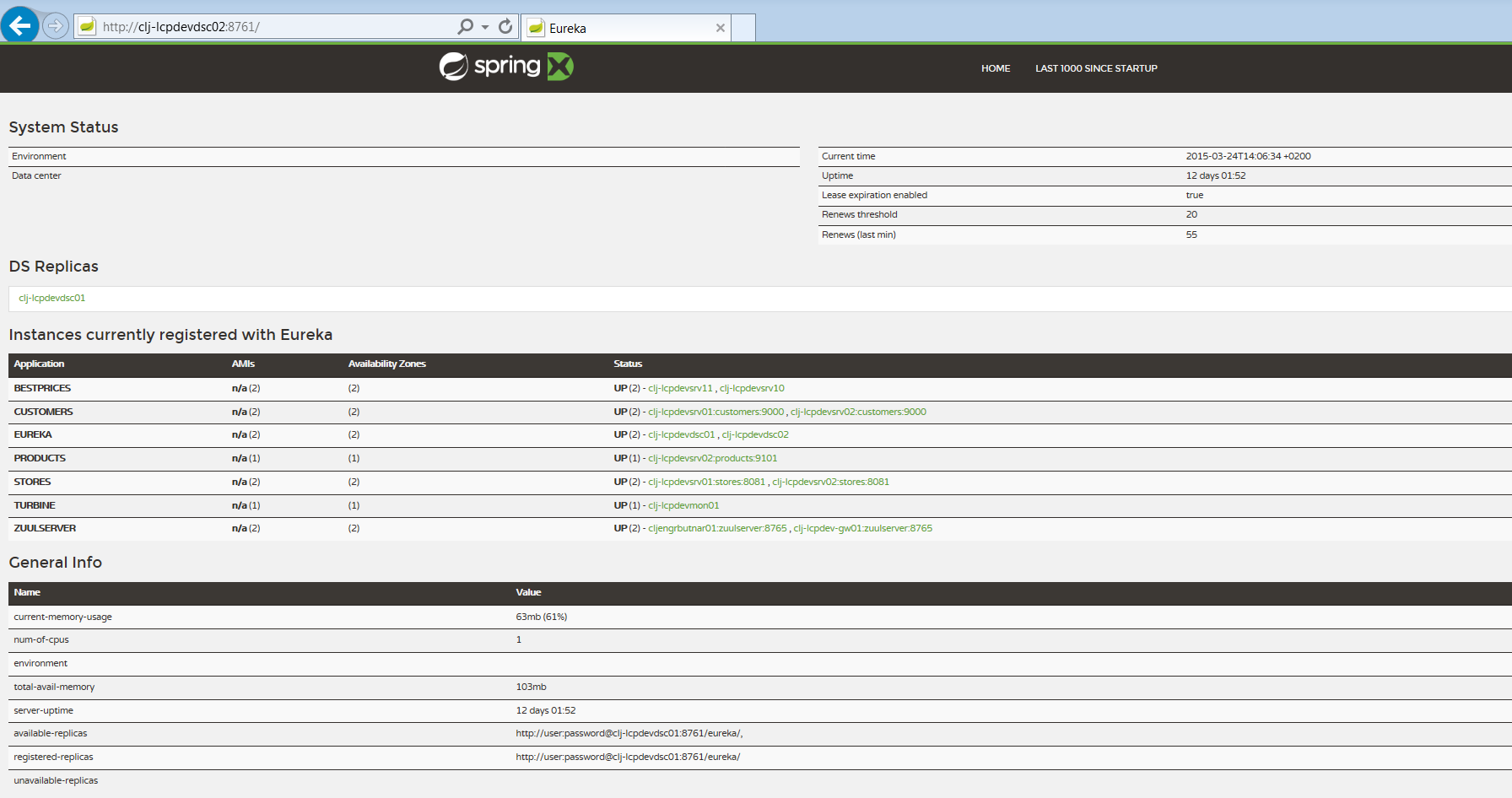

Eureka Dashboard

Graphic Web Interface

Eureka offers a web dashboard where the status of the Server can be observed. We have a sample application environment in which we assess technologies.

We started from the Spring Cloud samples and modified them to fit our needs.

The Eureka Dashboard screenshots above show a Eureka Server from our sample application environment.

XML/Text Web interface

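More details can be retrieved through a text-based (XML) interface, which the Server exposes over HTTP (for the local setup above, at http://localhost:8761/eureka/apps):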

```xml
<?xml version="1.0"?>
<applications>
  <versions__delta>1</versions__delta>
  <apps__hashcode>UP_11_</apps__hashcode>
  <application>...</application>
  <application>...</application>
  <application>...</application>
  <application>
    <name>BESTPRICES</name>
    <instance>...</instance>
    <instance>
      <hostName>clj-lcpdevsrv10</hostName>
      <app>BESTPRICES</app>
      <ipAddr>10.111.42.71</ipAddr>
      <status>UP</status>
      <overriddenstatus>UNKNOWN</overriddenstatus>
      <port enabled="true">8000</port>
      <securePort enabled="true">443</securePort>
      <countryId>1</countryId>
      <dataCenterInfo class="com.netflix.appinfo.InstanceInfo$DefaultDataCenterInfo">
        <name>MyOwn</name>
      </dataCenterInfo>
      <leaseInfo>
        <renewalIntervalInSecs>30</renewalIntervalInSecs>
        <durationInSecs>90</durationInSecs>
        <registrationTimestamp>1426271956166</registrationTimestamp>
        <lastRenewalTimestamp>1427199350993</lastRenewalTimestamp>
        <evictionTimestamp>0</evictionTimestamp>
        <serviceUpTimestamp>1426157045251</serviceUpTimestamp>
      </leaseInfo>
      <metadata class="java.util.Collections$EmptyMap"/>
      <appGroupName>MYSIDECARGROUP</appGroupName>
      <homePageUrl>http://clj-lcpdevsrv10:8000/</homePageUrl>
      <statusPageUrl>http://clj-lcpdevsrv10:8001/info</statusPageUrl>
      <healthCheckUrl>http://clj-lcpdevsrv10:8001/health</healthCheckUrl>
      <vipAddress>bestprices</vipAddress>
      <isCoordinatingDiscoveryServer>false</isCoordinatingDiscoveryServer>
      <lastUpdatedTimestamp>1426271956166</lastUpdatedTimestamp>
      <lastDirtyTimestamp>1426271939882</lastDirtyTimestamp>
      <actionType>ADDED</actionType>
    </instance>
  </application>
  <application>...</application>
  <application>...</application>
  <application>...</application>
</applications>
```
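Other parties can consume this XML directly. The sketch below uses the JDK's DOM parser to list the host, port and status of each instance of one application. `RegistryXml` is a hypothetical name, error handling is minimal, and the document is passed in as a string rather than fetched over HTTP.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical reader for the Eureka registry XML.
final class RegistryXml {
    // Returns "host:port status" for each instance of the given application.
    static List<String> instancesOf(String xml, String appName) {
        Document doc;
        try {
            doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (Exception e) {
            throw new IllegalArgumentException("Not a readable registry document", e);
        }
        List<String> result = new ArrayList<>();
        NodeList apps = doc.getElementsByTagName("application");
        for (int i = 0; i < apps.getLength(); i++) {
            Element app = (Element) apps.item(i);
            // The application's own <name> precedes any nested <name> in document order.
            if (!appName.equalsIgnoreCase(text(app, "name"))) {
                continue;
            }
            NodeList instances = app.getElementsByTagName("instance");
            for (int j = 0; j < instances.getLength(); j++) {
                Element inst = (Element) instances.item(j);
                result.add(text(inst, "hostName") + ":" + text(inst, "port") + " " + text(inst, "status"));
            }
        }
        return result;
    }

    // Trimmed text of the first descendant element with the given tag name.
    private static String text(Element parent, String tag) {
        NodeList nodes = parent.getElementsByTagName(tag);
        return nodes.getLength() == 0 ? "" : nodes.item(0).getTextContent().trim();
    }
}
```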

Instance info

The element "instance" above holds full details about a registered Service Instance.

Most of the details are self-explanatory: they describe the physical location of the Instance, its lease information and other metadata. The health check URLs can be used by external monitoring tools.

Custom metadata can be added to the instance information and consumed by other parties.
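In Spring Cloud, custom entries can be declared through `eureka.instance.metadataMap.*` properties (for example a hypothetical `eureka.instance.metadataMap.version: 2`) and read on the consumer side from `ServiceInstance.getMetadata()`. The sketch below shows only the consumer-side idea, selecting instances by an advertised key and value; `MetadataInstance`, `MetadataSelector` and the `version` key are hypothetical.

```java
import java.util.List;
import java.util.Map;

// Hypothetical view of a discovered instance and its custom metadata.
record MetadataInstance(String address, Map<String, String> metadata) {}

final class MetadataSelector {
    // Keeps only the instances that advertise metadata key=value.
    static List<MetadataInstance> withMetadata(List<MetadataInstance> instances,
                                               String key, String value) {
        return instances.stream()
                .filter(i -> value.equals(i.metadata().get(key)))
                .toList();
    }
}
```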

Eureka Client

The Client lives within the Service Instance ecosystem. It can be embedded in the Service or run as a sidecar process. Netflix advises embedding it in Java-based services and using a sidecar for non-JVM services.

The Client has to be configured with a list of Servers. The configurations above (DNS and static list) apply to the Client too, since the Servers use the Client to communicate with each other.

Any Spring Boot application becomes a Eureka Client when annotated with `@EnableDiscoveryClient`, provided that the Eureka Client library is on the classpath:

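```java
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.cloud.client.discovery.EnableDiscoveryClient;

@SpringBootApplication
@EnableDiscoveryClient   // registers this application with Eureka and enables lookups
public class SampleEurekaClientApp {

    public static void main(String[] args) {
        SpringApplication.run(SampleEurekaClientApp.class, args);
    }
}
```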

Heartbeats

The Client and the Server implement a heartbeat protocol. The Client must send regular heartbeats to the Server, which expects them in order to keep the instance in the registry and to update the instance info; if the heartbeats stop, the instance is removed from the registry. The time frames are configurable (see renewalIntervalInSecs and durationInSecs in the leaseInfo element above).
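The lease rule can be sketched as follows: every heartbeat renews the lease, and an instance whose last renewal is older than the lease duration is considered expired. This is a simplified model with a hypothetical `Lease` class; Eureka's real lease handling differs in details such as when the eviction task runs.

```java
import java.time.Duration;
import java.time.Instant;

// Hypothetical, simplified model of a Eureka lease.
final class Lease {
    private final Duration duration;   // e.g. durationInSecs = 90
    private Instant lastRenewal;

    Lease(Duration duration, Instant registeredAt) {
        this.duration = duration;
        this.lastRenewal = registeredAt;
    }

    // Called for every heartbeat received from the Client (e.g. every 30 seconds).
    void renew(Instant now) {
        lastRenewal = now;
    }

    // Expired once no heartbeat arrived within the lease duration.
    boolean isExpired(Instant now) {
        return now.isAfter(lastRenewal.plus(duration));
    }
}
```

With a 30-second heartbeat and a 90-second lease, a lease spans three heartbeat intervals, so a single lost heartbeat does not remove an instance.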

Heartbeats can also carry the status of the Service Instance (UP, DOWN, OUT_OF_SERVICE), which immediately affects the discovery query results.
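The effect of the status on query results can be sketched as a client-side filter that only hands out instances reporting UP. The enum mirrors the three statuses named above (Eureka defines further ones, such as STARTING and UNKNOWN, left out here); `StatusInstance` and `StatusFilter` are hypothetical.

```java
import java.util.List;

// Subset of Eureka's instance statuses mentioned in the text.
enum Status { UP, DOWN, OUT_OF_SERVICE }

// Hypothetical registry entry: an address plus the status from the latest heartbeat.
record StatusInstance(String address, Status status) {}

final class StatusFilter {
    // Only instances reporting UP should receive traffic.
    static List<StatusInstance> upOnly(List<StatusInstance> instances) {
        return instances.stream().filter(i -> i.status() == Status.UP).toList();
    }
}
```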

Server self preservation mode

Eureka Server has a protection feature: if too many Instances fail to send heartbeats within a given time interval, the Server does not remove them from the registry. It assumes that a network partition has occurred and waits for these Instances to come back. This feature is very useful in cloud deployments and can be turned off for co-located Services in a private data center; the local configuration above does so with enableSelfPreservation: false.
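The protection rule can be approximated as a threshold on renewals per minute. The model below assumes that each registered instance should renew once per heartbeat interval and that eviction stops when the renewals received in the last minute do not exceed a percentage of the expected count. The 0.85 used in the example reflects our understanding of Eureka's default renewalPercentThreshold; the class and method names are hypothetical, and this is not the Server's code.

```java
// Hypothetical approximation of Eureka's self-preservation check.
final class SelfPreservation {
    // True if the Server may evict expired instances; false if it should
    // preserve the registry because too few heartbeats arrived.
    static boolean evictionAllowed(int registeredInstances, int renewsLastMinute,
                                   int renewalIntervalSecs, double percentThreshold) {
        double expectedPerMinute = registeredInstances * (60.0 / renewalIntervalSecs);
        int threshold = (int) (expectedPerMinute * percentThreshold);
        return renewsLastMinute > threshold;
    }
}
```

With 10 registered instances and 30-second heartbeats, the model expects 20 renewals per minute and sets the threshold at 17. If a partition cuts renewals to, say, 12 per minute, eviction stops instead of wiping most of the registry.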

Client Side Caching

One of the best Eureka features is Client Side Caching. The Client regularly pulls discovery information from the registry and caches it locally, so it has essentially the same view of the system as the Server. If all Servers go down, or the Client is isolated from them by a network partition, it can still behave properly until its cache becomes obsolete.

Caching also improves performance: no round-trip to the Server is needed at the moment a request to another service is made.
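The caching behaviour can be sketched as a client that refreshes its copy of the registry periodically and keeps serving the last successful snapshot when a refresh fails. `RegistryCache` and its `fetcher` are hypothetical; the real Eureka Client refreshes on a timer (every 30 seconds by default) and can fetch incremental deltas, which this sketch omits.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Supplier;

// Hypothetical client-side registry cache: app name -> instance addresses.
final class RegistryCache {
    private final Supplier<Map<String, List<String>>> fetcher; // calls a Eureka Server
    private final AtomicReference<Map<String, List<String>>> snapshot =
            new AtomicReference<>(Map.of());

    RegistryCache(Supplier<Map<String, List<String>>> fetcher) {
        this.fetcher = fetcher;
    }

    // Meant to run periodically, e.g. from a ScheduledExecutorService.
    // Returns false if the Server could not be reached; the old snapshot is kept.
    boolean refresh() {
        try {
            snapshot.set(Map.copyOf(fetcher.get()));
            return true;
        } catch (RuntimeException e) {
            return false; // Server down or partitioned: keep serving the last snapshot
        }
    }

    // Lookups never leave the process, so there is no network round-trip per request.
    List<String> getInstances(String appName) {
        return snapshot.get().getOrDefault(appName, List.of());
    }
}
```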

Usage

Eureka Discovery Client can be used directly or through other libraries that integrate with Eureka.

Here are the options for calling our sample Service, bestprices, based on information provided by Eureka:

Direct

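```java
import java.net.URI;
import java.util.List;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.cloud.client.ServiceInstance;
import org.springframework.cloud.client.discovery.DiscoveryClient;

// ...
@Autowired
private DiscoveryClient discoveryClient;
// ... (restTemplate is a RestTemplate field of the same class)

private BestPrice findBestPriceWithEurekaclient(final String productSku) {
    BestPrice bestPrice = null;
    // Look up all registered instances of the "bestprices" application
    List<ServiceInstance> instances = discoveryClient.getInstances("bestprices");
    if (instances != null && !instances.isEmpty()) {
        // Simply take the first instance: no load balancing at this level
        ServiceInstance instance = instances.get(0);
        URI productUri = URI.create(String.format("http://%s:%d/bestprices/%s",
                instance.getHost(), instance.getPort(), productSku));
        bestPrice = restTemplate.getForObject(productUri, BestPrice.class);
    }
    return bestPrice;
}
```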

Ribbon Load Balancer

Ribbon is an HTTP client and software load balancer from Netflix that integrates nicely with Eureka:

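```java
import java.net.URI;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.cloud.client.ServiceInstance;
import org.springframework.cloud.client.loadbalancer.LoadBalancerClient;

// ...
@Autowired
private LoadBalancerClient loadBalancerClient;
// ... (restTemplate is a RestTemplate field of the same class)

private BestPrice findBestPriceWithLoadBalancerClient(final String productSku) {
    BestPrice bestPrice = null;
    // Ribbon chooses one "bestprices" instance from the list provided by Eureka
    ServiceInstance instance = loadBalancerClient.choose("bestprices");
    if (instance != null) {
        URI productUri = URI.create(String.format("http://%s:%d/bestprices/%s",
                instance.getHost(), instance.getPort(), productSku));
        bestPrice = restTemplate.getForObject(productUri, BestPrice.class);
    }
    return bestPrice;
}
```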
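The load balancer's job here is to pick a different instance across requests instead of always taking the first one, as the direct approach does. A simple round-robin chooser illustrates the idea. It is a hypothetical stand-in, not Ribbon's implementation, whose default rules can also take zones and server availability into account. Like the example above, callers must handle a null result when no instance is available.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical round-robin chooser over the instances returned by discovery.
final class RoundRobinChooser<T> {
    private final AtomicInteger next = new AtomicInteger();

    // Returns null when no instance is available.
    T choose(List<T> instances) {
        if (instances == null || instances.isEmpty()) {
            return null;
        }
        // floorMod keeps the index non-negative even after the counter overflows.
        int index = Math.floorMod(next.getAndIncrement(), instances.size());
        return instances.get(index);
    }
}
```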

Non-JVM Service integration

This aspect is not extensively covered and may be the topic for another article.

There are two approaches: