SageMaker is a fully managed AWS service that covers the entire machine learning workflow. Using the AWS SageMaker demo, we illustrate the most important relationships, basics and functional principles.

For our experiment, we use the MNIST dataset as training data. The Modified National Institute of Standards and Technology (MNIST) database is a large database of handwritten digits that is commonly used to train image processing systems, and it is widely used for training and testing in machine learning (ML). The dataset was created by "remixing" the samples from the original NIST datasets. The creators felt that the NIST data was not directly suited to machine learning experiments, because the training set came from American Census Bureau staff and the test set from American students. In MNIST, NIST's black-and-white images were normalised to 28 x 28 pixels and anti-aliased, which introduced grayscale values.

The MNIST database of handwritten digits currently includes a training set of 60,000 examples and a test set of 10,000 examples, drawn from the larger NIST dataset. MNIST is well suited for trying out learning techniques and pattern recognition methods on real data with minimal pre-processing and formatting.

In the following experiment, we work through the example in this notebook: https://github.com/prasanjit-/ml_notebooks/blob/master/kmeans_mnist.ipynb

The high level steps are:

- Prepare training data

- Train a model

- Deploy & validate the model

- Use the result for predictions

Refer to the Jupyter notebook in the GitHub repo for detailed steps: https://github.com/prasanjit-/ml_notebooks/blob/master/MNISTDemo.ipynb

Below is a summary of the steps to create this training model on SageMaker:

1. Create an S3 bucket

Create an S3 bucket to hold the following:

a. The model training data

b. Model artifacts (which Amazon SageMaker generates during model training).
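The bucket can be created in the console, or programmatically. Here is a minimal sketch using the boto3 `create_bucket` call. The helper name `create_training_bucket`, the bucket name and the region are placeholders assumed for illustration, not values from the original walkthrough.

```python
def create_training_bucket(s3_client, bucket_name, region):
    """Create an S3 bucket for training data and model artifacts.

    `s3_client` is expected to behave like boto3.client("s3").
    The function name and arguments are hypothetical.
    """
    params = {"Bucket": bucket_name}
    # S3 rejects an explicit LocationConstraint for us-east-1,
    # so the configuration is only added for other regions.
    if region != "us-east-1":
        params["CreateBucketConfiguration"] = {"LocationConstraint": region}
    s3_client.create_bucket(**params)
    return "s3://{}".format(bucket_name)


# Usage (needs boto3 and configured AWS credentials):
#   import boto3
#   client = boto3.client("s3", region_name="eu-west-1")
#   create_training_bucket(client, "bucket-name", "eu-west-1")
```

Passing the client in as an argument keeps the helper easy to try out against a stub before touching a real account.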

2. Create a Notebook instance

Create a notebook instance from the SageMaker console: https://console.aws.amazon.com/sagemaker/

3. Create a new conda_python3 notebook

Once it has been created, open the notebook instance; you will be directed to the Jupyter server. There, create a new conda_python3 notebook.

4. Specify the role

Specify the role and S3 bucket as follows:

from sagemaker import get_execution_role

role = get_execution_role()

bucket = 'bucket-name'

5. Download the MNIST dataset

Download the MNIST dataset to the notebook’s memory.

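The MNIST database of handwritten digits has a training set of 60,000 examples. The pickled copy used here splits it into 50,000 training and 10,000 validation examples (train_set and valid_set), alongside the 10,000-example test_set.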

%%time

import pickle, gzip, numpy, urllib.request, json

# Load the dataset

urllib.request.urlretrieve("http://deeplearning.net/data/mnist/mnist.pkl.gz", "mnist.pkl.gz")

with gzip.open('mnist.pkl.gz', 'rb') as f:

    train_set, valid_set, test_set = pickle.load(f, encoding='latin1')

6. Convert to RecordIO Format

For this example, the data needs to be converted to RecordIO format, a file format for storing a sequence of records. Each record is stored as an unsigned varint specifying the length of the data, followed by the data itself as a binary blob.
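To make the "length prefix, then blob" idea concrete, here is a small pure-Python sketch of that framing. It only illustrates the layout described above and should not be read as the exact byte format SageMaker uses. The function names `encode_varint`, `write_records` and `read_records` are hypothetical.

```python
import io


def encode_varint(n):
    """Encode a non-negative integer as an unsigned base-128 varint."""
    if n < 0:
        raise ValueError("varint must be non-negative")
    out = bytearray()
    while True:
        byte = n & 0x7F
        n >>= 7
        if n:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def write_records(records):
    """Serialise byte strings as <varint length><payload> pairs."""
    buf = io.BytesIO()
    for payload in records:
        buf.write(encode_varint(len(payload)))
        buf.write(payload)
    return buf.getvalue()


def read_records(data):
    """Parse the stream produced by write_records back into byte strings."""
    records, pos = [], 0
    while pos < len(data):
        length, shift = 0, 0
        while True:  # decode the varint length prefix
            byte = data[pos]
            pos += 1
            length |= (byte & 0x7F) << shift
            if not byte & 0x80:
                break
            shift += 7
        records.append(data[pos:pos + length])
        pos += length
    return records


blob = write_records([b"first record", b"second"])
print(read_records(blob))
```

Because every record carries its own length, a reader can stream through the file one record at a time without needing a separator character.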

Algorithms can accept input data from one or more channels. For example, an algorithm might have two channels of input data, training_data and validation_data. The configuration for each channel provides the S3 location where the input data is stored. It also provides information about the stored data: the MIME type, compression method, and whether the data is wrapped in RecordIO format.

Depending on the input mode that the algorithm supports, Amazon SageMaker either copies input data files from an S3 bucket to a local directory in the Docker container, or makes it available as input streams.
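As a rough illustration, a channel configuration of the kind described above could be assembled as a plain dictionary. The field names follow the `InputDataConfig` structure of the low-level CreateTrainingJob request. The helper `make_channel`, the S3 paths and the content type are placeholders assumed for this sketch.

```python
def make_channel(name, s3_uri, content_type, record_io=False, compression="None"):
    """Build one entry of an InputDataConfig list (hypothetical helper)."""
    return {
        "ChannelName": name,
        "DataSource": {
            "S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": s3_uri,  # where the input data is stored
                "S3DataDistributionType": "FullyReplicated",
            }
        },
        "ContentType": content_type,      # MIME type of the data
        "CompressionType": compression,   # "None" or "Gzip"
        # "RecordIO" asks SageMaker to wrap the raw data in RecordIO;
        # data that is already in RecordIO format keeps "None".
        "RecordWrapperType": "RecordIO" if record_io else "None",
    }


# Two channels, matching the training_data / validation_data example above.
input_data_config = [
    make_channel("training_data", "s3://bucket-name/train",
                 "application/x-recordio-protobuf"),
    make_channel("validation_data", "s3://bucket-name/validation",
                 "application/x-recordio-protobuf"),
]
for channel in input_data_config:
    print(channel["ChannelName"], "->", channel["DataSource"]["S3DataSource"]["S3Uri"])
```

The copy-versus-stream choice mentioned above corresponds to the `File` and `Pipe` input modes, which are set on the training job rather than in this dictionary.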

No manual transformation is needed in this example, because the high-level Amazon SageMaker Python library, whose fit method we use here, takes care of the conversion.

7. Create a training job

In this example we will use the Amazon SageMaker KMeans module.

Import KMeans from the sagemaker package and configure the estimator as follows:

from sagemaker import KMeans

data_location = 's3://{}/kmeans_highlevel_example/data'.format(bucket)

output_location = 's3://{}/kmeans_highlevel_example/output'.format(bucket)

print('training data will be uploaded to: {}'.format(data_location))

print('training artifacts will be uploaded to: {}'.format(output_location))

kmeans = KMeans(role=role,

train_instance_count=2,

train_instance_type='ml.c4.8xlarge',

output_path=output_location,

k=10,

data_location=data_location)

- role — The IAM role that Amazon SageMaker can assume to perform tasks on your behalf (for example, reading training results, called model artifacts, from the S3 bucket and writing training results to Amazon S3).

- output_path — The S3 location where Amazon SageMaker stores the training results.

- train_instance_count and train_instance_type — The type and number of ML EC2 compute instances to use for model training.

- k — The number of clusters to create. For more information, see K-Means Hyperparameters.

- data_location — The S3 location where the high-level library uploads the transformed training data.
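To give a feel for what `k` controls, here is a sketch of the textbook k-means procedure (Lloyd's algorithm) in pure Python. This is not SageMaker's implementation, which runs distributed across the training instances. The function names, the `epochs` and `seed` arguments, and the toy points are all assumptions made for illustration.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, epochs=10, seed=0):
    """Plain Lloyd's algorithm: returns a list of k centroids."""
    rng = random.Random(seed)
    # Start from k distinct points picked at random.
    centroids = [list(p) for p in rng.sample(points, k)]
    for _ in range(epochs):
        # Assignment step: group every point with its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: squared_distance(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        for i, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster is empty
                centroids[i] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids


# Two well-separated groups of 2-D points, so k=2 is the natural choice.
toy_points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
print(kmeans(toy_points, k=2))
```

In the MNIST experiment each point is a flattened 28 x 28 image, and `k=10` asks for one cluster per digit, although nothing forces the clusters to line up with the digits exactly.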

8. Start Model Training

%%time

kmeans.fit(kmeans.record_set(train_set[0]))

9. Deploy a Model

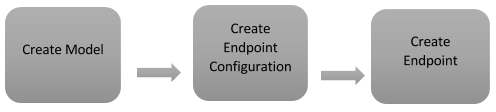

Deploying a model is a three-step process.

- Create a Model — CreateModel request is used to provide information such as the location of the S3 bucket that contains your model artifacts and the registry path of the image that contains inference code.

- Create an Endpoint Configuration — CreateEndpointConfig request is used to provide the resource configuration for hosting. This includes the type and number of ML compute instances to launch for deploying the model.

- Create an Endpoint — CreateEndpoint request is used to create an endpoint. Amazon SageMaker launches the ML compute instances and deploys the model.
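For orientation, the three requests listed above could be issued through the low-level boto3 SageMaker client roughly as follows. This is a sketch under assumptions: the helper `deploy_model`, the naming scheme for the configuration and endpoint, and all argument values are hypothetical, and error handling is omitted.

```python
def deploy_model(sm_client, name, image_uri, model_data_url, role_arn,
                 instance_type="ml.m4.xlarge", instance_count=1):
    """Issue the three hosting requests in order and return the endpoint name.

    `sm_client` is expected to behave like boto3.client("sagemaker").
    """
    # 1. Register the model: inference image plus model artifacts in S3.
    sm_client.create_model(
        ModelName=name,
        PrimaryContainer={"Image": image_uri, "ModelDataUrl": model_data_url},
        ExecutionRoleArn=role_arn,
    )
    # 2. Describe the hosting resources for that model.
    config_name = name + "-config"
    sm_client.create_endpoint_config(
        EndpointConfigName=config_name,
        ProductionVariants=[{
            "VariantName": "AllTraffic",
            "ModelName": name,
            "InitialInstanceCount": instance_count,
            "InstanceType": instance_type,
        }],
    )
    # 3. Launch the instances and deploy the model behind an endpoint.
    endpoint_name = name + "-endpoint"
    sm_client.create_endpoint(
        EndpointName=endpoint_name,
        EndpointConfigName=config_name,
    )
    return endpoint_name


# Usage (needs boto3 and configured AWS credentials):
#   import boto3
#   deploy_model(boto3.client("sagemaker"), "kmeans-demo", image_uri,
#                model_data_url, role)
```

Note that `create_endpoint` returns as soon as the request is accepted, so a caller working at this level has to wait for the endpoint to come into service before sending it requests.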

The deploy method of the high-level Python library performs all of these tasks.

%%time

kmeans_predictor = kmeans.deploy(initial_instance_count=1,

instance_type='ml.m4.xlarge')

The sagemaker.amazon.kmeans.KMeans instance knows the registry path of the image that contains the k-means inference code, so you don’t need to provide it.

This is a synchronous operation. The method waits until the deployment completes before returning. It returns a kmeans_predictor.

10. Validate the Model

Here we get an inference for a single handwritten digit, the image at index 30 of the train_set dataset.

result = kmeans_predictor.predict(train_set[0][30:31])

print(result)

The result would show the closest cluster and the distance from that cluster.
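Conceptually, the endpoint compares the input with each learned cluster centre and reports the nearest one. Below is a minimal sketch of that lookup, assuming Euclidean distance. The function name `predict_cluster` and the dictionary keys are assumptions chosen to mirror the description above, not the library's actual response type.

```python
import math


def predict_cluster(point, centroids):
    """Return the index of the nearest centroid and the distance to it."""
    distances = [
        math.sqrt(sum((x - c) ** 2 for x, c in zip(point, centroid)))
        for centroid in centroids
    ]
    closest = min(range(len(centroids)), key=distances.__getitem__)
    return {"closest_cluster": closest, "distance_to_cluster": distances[closest]}


# Toy 2-D example; in the experiment each point would have 784 values.
print(predict_cluster((3, 4), [(10, 10), (0, 0)]))
```

A small distance means the image sits close to the centre of its cluster, while a large one suggests an unusual or ambiguous digit.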

This video has a complete demonstration of this experiment.

Below is the set of commands that were executed and the results of the execution:

In [1]:

from sagemaker import get_execution_role

role = get_execution_role()

bucket = 'sagemaker-ps-01' # Use the name of your s3 bucket here

In [2]:

role

Out[2]:

'arn:aws:iam::779615490104:role/service-role/AmazonSageMaker-ExecutionRole-20191103T150143'

In [3]:

%%time

import pickle, gzip, numpy, urllib.request, json

# Load the dataset

urllib.request.urlretrieve("http://deeplearning.net/data/mnist/mnist.pkl.gz", "mnist.pkl.gz")

with gzip.open('mnist.pkl.gz', 'rb') as f:

    train_set, valid_set, test_set = pickle.load(f, encoding='latin1')

CPU times: user 892 ms, sys: 278 ms, total: 1.17 s

Wall time: 4.6 s

In [6]:

%matplotlib inline

import matplotlib.pyplot as plt

plt.rcParams["figure.figsize"] = (2,10)

def show_digit(img, caption='', subplot=None):

    if subplot == None:

        _, (subplot) = plt.subplots(1,1)

    imgr = img.reshape((28,28))

    subplot.axis('off')

    subplot.imshow(imgr, cmap='gray')

    plt.title(caption)

show_digit(train_set[0][1], 'This is a {}'.format(train_set[1][1]))

In [7]:

from sagemaker import KMeans

data_location = 's3://{}/kmeans_highlevel_example/data'.format(bucket)

output_location = 's3://{}/kmeans_highlevel_example/output'.format(bucket)

print('training data will be uploaded to: {}'.format(data_location))

print('training artifacts will be uploaded to: {}'.format(output_location))

kmeans = KMeans(role=role,

train_instance_count=2,

train_instance_type='ml.c4.8xlarge',

output_path=output_location,

k=10,

epochs=100,

data_location=data_location)

training data will be uploaded to: s3://sagemaker-ps-01/kmeans_highlevel_example/data

training artifacts will be uploaded to: s3://sagemaker-ps-01/kmeans_highlevel_example/output

In [8]:

%%time

kmeans.fit(kmeans.record_set(train_set[0]))